NeuralNet::Adam Class Reference

#include <Adam.hpp>

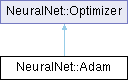

Inheritance diagram for NeuralNet::Adam:

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
|  | Adam (double alpha=0.001, double beta1=0.9, double beta2=0.999, double epsilon=10E-8) |  |
| void | updateWeights (Eigen::MatrixXd &weights, const Eigen::MatrixXd &weightsGrad) override | Updates the weights passed, based on the selected optimizer and the weight gradients. |
| void | updateBiases (Eigen::MatrixXd &biases, const Eigen::MatrixXd &biasesGrad) override | Updates the biases passed, based on the selected optimizer and the bias gradients. |
| template<typename Derived1, typename Derived2> void | update (Eigen::MatrixBase< Derived1 > &param, const Eigen::MatrixBase< Derived2 > &gradients, Eigen::MatrixBase< Derived1 > &m, Eigen::MatrixBase< Derived1 > &v) |  |

Public Member Functions inherited from NeuralNet::Optimizer

| Type | Member |
| --- | --- |
|  | Optimizer (double alpha) |

Additional Inherited Members

Protected Attributes inherited from NeuralNet::Optimizer

| Type | Member |
| --- | --- |
| double | alpha |

Detailed Description

Adam (adaptive moment estimation) optimizer.

Constructor & Destructor Documentation

◆ Adam()

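NeuralNet::Adam::Adam (double alpha = 0.001, double beta1 = 0.9, double beta2 = 0.999, double epsilon = 10E-8) [inline]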

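Adam is an optimization algorithm that can be used in place of classical stochastic gradient descent to update network weights iteratively. It keeps exponentially decaying averages of past gradients (the first moment) and of past squared gradients (the second moment), and uses them to adapt the step size for each parameter individually.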

- Parameters

  - alpha: Learning rate.
  - beta1: Exponential decay rate for the first moment estimates.
  - beta2: Exponential decay rate for the second moment estimates.
  - epsilon: A small constant for numerical stability. Note that the default 10E-8 evaluates to 1e-7 in C++, not 1e-8.
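For reference, the textbook Adam update (Kingma and Ba) is given below. It is applied element-wise to a parameter matrix θ with gradient g_t at step t, starting from m_0 = v_0 = 0, and α, β₁, β₂ and ε play the roles of alpha, beta1, beta2 and epsilon. This page does not document the internals of NeuralNet::Adam (for example, whether it applies the bias-correction terms exactly as written), so read this as the standard form rather than as a description of this class.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \alpha \, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

At t = 1 the bias correction gives m̂₁ = g₁ and v̂₁ = g₁², so the first step changes each entry by α·g₁/(|g₁| + ε), which is roughly α in magnitude, opposite in sign to the gradient. In this form alpha sets the scale of each per-element step largely independently of the gradient's magnitude.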

Member Function Documentation

◆ updateBiases()

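void NeuralNet::Adam::updateBiases (Eigen::MatrixXd &biases, const Eigen::MatrixXd &biasesGrad) [inline], [override], [virtual]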

This function updates the biases passed, based on the selected optimizer and the bias gradients.

- Parameters

  - biases: The biases that should be updated.
  - biasesGrad: The bias gradients.

The function returns void because it updates the biases passed in place.

Implements NeuralNet::Optimizer.

◆ updateWeights()

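void NeuralNet::Adam::updateWeights (Eigen::MatrixXd &weights, const Eigen::MatrixXd &weightsGrad) [inline], [override], [virtual]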

This function updates the weights passed, based on the selected optimizer and the weight gradients.

- Parameters

  - weights: The weights that should be updated.
  - weightsGrad: The weight gradients.

The function returns void because it updates the weights passed in place.

Implements NeuralNet::Optimizer.
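The element-wise update performed for weights and biases can be sketched in plain C++ as below. This is a standalone illustration, not the NeuralNet::Adam source: it uses std::vector<double> instead of Eigen matrices, and the names AdamState and adamStep are hypothetical. It keeps one set of moment buffers per parameter tensor, which is what the m and v arguments of update() suggest, although this page does not say how the class actually stores them.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical per-parameter-tensor state (not part of NeuralNet):
// first/second moment estimates plus the number of steps taken so far.
struct AdamState {
    std::vector<double> m;  // running average of gradients (first moment)
    std::vector<double> v;  // running average of squared gradients (second moment)
    int t = 0;              // step counter used for bias correction
};

// Hypothetical helper: one textbook Adam step, applied element-wise and in place.
// Default hyperparameters mirror the NeuralNet::Adam constructor (10E-8 == 1e-7).
void adamStep(std::vector<double>& params,
              const std::vector<double>& grads,
              AdamState& state,
              double alpha = 0.001, double beta1 = 0.9,
              double beta2 = 0.999, double epsilon = 10E-8) {
    assert(params.size() == grads.size());
    if (state.m.empty()) {  // zero-initialise the moments on first use
        state.m.assign(params.size(), 0.0);
        state.v.assign(params.size(), 0.0);
    }
    ++state.t;
    const double biasCorr1 = 1.0 - std::pow(beta1, state.t);
    const double biasCorr2 = 1.0 - std::pow(beta2, state.t);
    for (std::size_t i = 0; i < params.size(); ++i) {
        state.m[i] = beta1 * state.m[i] + (1.0 - beta1) * grads[i];
        state.v[i] = beta2 * state.v[i] + (1.0 - beta2) * grads[i] * grads[i];
        const double mHat = state.m[i] / biasCorr1;  // bias-corrected first moment
        const double vHat = state.v[i] / biasCorr2;  // bias-corrected second moment
        params[i] -= alpha * mHat / (std::sqrt(vHat) + epsilon);
    }
}
```

In this sketch, weights and biases would each get their own AdamState, and one adamStep call plays the role of one updateWeights or updateBiases call. With the default hyperparameters, a first step on a weight of 1.0 with gradient 0.5 moves it to about 0.999, matching the "roughly alpha per element" behaviour of the first Adam step.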

The documentation for this class was generated from the following file:

- /github/workspace/src/NeuralNet/optimizers/Adam.hpp
